A few years back I received that dubious American honor of being selected for a second degree murder jury trial. I picked up an absolutely great selection of quotes I noted down for posterity, as well as a lingering distaste for prosecutorial abuse of power, but perhaps the most interesting thing I took away from it was a sense of how the jury process can be compared to game design.

Specifically, very, very, very bad game design.

At its core, game design involves the creation of certain rules and structure intended to induce the player to accomplish a specified goal in an effective manner.

The game’s design rules are structured based on a set of assumptions as to the range of possible actions and motivations of the player; if these assumptions are incorrect, both the process (the gameplay) and the end result (satisfaction and a sense of accomplishment) are going to be in jeopardy.

At its core, the United States jury system also involves the creation of certain rules and structure intended to induce the juror to accomplish a specified goal (a fair verdict) in an effective manner (the jury process).

Lawyer: “About how many times had you ridden in a car before?”

Witness: “A lot?”

Lawyer: “I’ll accept that.”

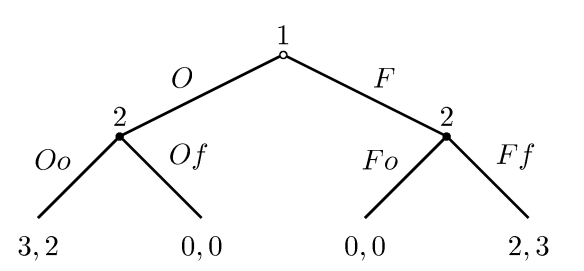

The first game system the jury system indulges in – and, obviously, I am using the term “game” in the sense of game theory – is the actual jury selection.

For those not familiar with the American system of jury selection, while there are variations from jurisdiction to jurisdiction, the way it roughly works when a jury needs to be called up is like this:

- Your name is selected at random from the lists of voter registration and (sometimes) Department of Motor Vehicle records.

- You are then on-call for a certain period of time. Huge numbers of potential jurors are called up, so if they successfully get their 12 jurors plus alternates, anyone left over gets a pass for the next year.

- As potential jurors come closer to the front of the line, they will be physically called into a waiting room. Often, after a few hours of just sitting there, the jury is selected and again, they dismiss everyone remaining.

- When you get to the head of that line, you are called in a big batch into the courtroom.

- Potential jurors are called up and questioned by the lawyers and (sometimes) the judge. A prospective juror can be dismissed for cause (you loudly proclaim that you are a horrible racist and could not possibly be objective) or as part of a limited number of peremptory challenges each lawyer has.

- If you pass all these questions, congratulations, you are seated on a jury.

- (Unless the two sides settle before the case even concludes, in which case you sat there to no purpose other than to serve as a pawn in a game of Lawyer Chicken.)

So let’s take a look at this from the perspective of principles of good game design.

For a jury trial, the goal is to provide the “deciders of fact” (the jurors) the necessary information to make a reasoned choice. Like a video game, the input – that is, the information you have at your disposal – can be relied upon to heavily influence the final decision – that is, the “player” action.

The first step of achieving this is to find jurors who are (1) representative of the community, and (2) sufficiently unbiased that they could theoretically decide either way.

And here’s where the jury system design starts to spring leaks. Any final jury seated is in no way representative – the way the system is rigged, it can’t be:

- Have a dependent and don’t have alternative care for them? You’re out.

- Don’t vote? Yeah, you probably won’t even be called up in the first place.

- Possess any subject-area knowledge of the case area? Yep, you’re out because you actually…have experience in the area? What? So a judge isn’t disqualified from a case on patent law because they know patent law, but a medical professional would be disqualified for a jury because they know something about medicine. (Yes, I understand the theory behind this. It’s a fairy-land theory suitable only for works of fiction. Bad fiction, at that.)

- Demonstrate any strong opinion about anything during the juror interview? Out. Can’t have that.

- Willing to lie to get out of it? Out. (Probably)

- Can’t financially support the time off work? Out as well, though in fairness, you really have to be a hard-luck case for this to fly. Still, it means that being poor can essentially by itself exempt you. The “pay” they offer is laughable and wouldn’t support a bad panhandling mime.

- Lawyer for either side suspects your class, profession, race, gender, or age is statistically more likely to vote in a way they won’t like. Gone.

So much for any hope of “representative”. Failing grade on first leg of the game design. Er, jury design.

What about the “sufficiently unbiased” criterion? Well, there’s a time-honored method for this: They ask you if you can be unbiased.

There’s a problem with this, though. It doesn’t work. As in, a double-blind study analyzing this very thing demonstrated that a potential juror’s response on this is literally as reliable as flipping a coin.

(In fairness, it could be worse; for similar studies about competence and confidence the finding was that for most of the curve, the more confidence someone evinced, the less competent they were statistically likely to be.)

In game design terms, this is like putting a player through an extensive dialog tree where the player has to respond in variable ways…and at the end of which, the responses are tossed and a completely random result is selected instead.

Lawyer: “Sir, what do you do for a living?”

Me: “I’m a video game designer.”

Lawyer: “So, what kind of games do you work on?”

Me: “The same kind of game as World of Warcraft.”

Judge: “I play that. But my character is much better looking than me.”

Even worse, because of the lawyers’ peremptory challenges – those dismissals by the lawyers where they do not need to show cause – the jury is going to be representative of how well the respective lawyers play chess.

(Now, there are some jurisdictions where peremptory challenges have been significantly curtailed, but these remain a minority of jurisdictions.)

So, if that addresses the inherent problem of seating an impartial jury, what about the actual process?

The jury is generally recognized as the “finders of fact”, as opposed to the judge who is the “finder of law”. Meaning, the jury is responsible for determining what happened, what witnesses are credible, while the judge is responsible for managing the process – what evidence can be shown, what questions can be asked, and so on.

While there are (rare) exceptions to this, generally a jury can’t ask questions of witnesses. Can’t ask for professional legal clarification of the law from the judge. Can’t do their own research (though of course, the judge can). And, most insane of all, can only operate within the set of charges determined by the prosecutor.

That last doesn’t sound that unreasonable, right?

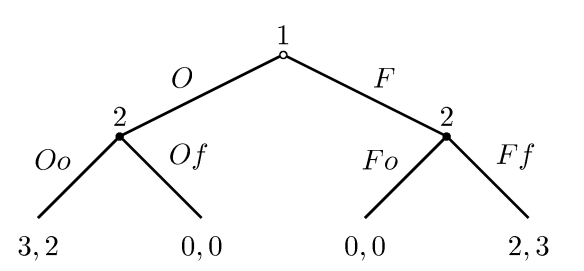

But it is. Closed-door deals, prosecutorial political ambitions, and such effectively give some people get-out-of-jail-free cards no matter what their degree of culpability and, worse yet, can subject the system to a game of what I call “Lawyer Chicken” where each side tries to bluff the other into folding rather than subject the case to the “whims” of a jury.

Witness: “I heard a noise.”

Lawyer: “What kind of noise?”

Witness: “Boom.”

In the case of the trial I was on, the charge was second degree murder on a case of vehicular manslaughter. The jury did not have the authority to say, “This doesn’t meet the level of second degree murder, but it does meet the level of vehicular manslaughter.”

Nope. The prosecutor wanted to appear tough on crime, so she literally gave the jury the choice of giving a guy who had hugely fucked up, but still due to terrible judgment rather than malice, decades in prison…or letting him completely off the hook.

The prosecutor knew no sane individual would want to let the guy off the hook completely, but she also knew that most juries would go for the charge that, well, fit the actual letter of the law. So she took it off the table to offer the jury a devil’s choice.

(As a side note, I found out later the judge could have overruled this, but as a matter of process, this is apparently rarely done.)

In game design terms, the jury trial system is effectively cheating the player (the juror) by giving them insufficient information and inadequate control over the inputs of the system to make a reasonable choice. The result is not only bad decisions (way more of a problem in a criminal trial than a game, too), but also a real danger of nurturing disillusionment with the trial system.

Finally, there is what I call the system of “Institutionalized Fictional Perjuries”.

These are the fictions in the form of code phrases that everyone in the room knows are complete and utter nonsense, but are perpetuated in order to fit certain legal fantasies.

For example, in the case of this trial, not surprisingly a number of cops were brought up and conversationally asked, “How did the defendant appear?” Every. Single. Answer…was identical. And, not surprisingly, matched precisely the legal definition of the signs of drunkenness.

(Here I feel obligated to note, yeah, it was obvious the guy was plastered, but the fiction of pretending the officers were actually giving their honest impressions in their own words when their words were identical and matched the prosecutor’s needs was…cloying.)

Another example in much the same vein: Legally, you can’t refer to written material that has not been subjected to the rigorous demands of evidence. A reasonable point of process, but one that is completely abused when, again, Every. Single. Witness…would say the code phrase, “If I might refer to my notes to see if that refreshes my memory?” Right. That’s what you’re doing. Uh-huh.

(I swear, if they had let us bring alcohol in, we could have made a brilliant drinking game out of this. One drink for every time the prosecutor came up with an excuse to show us the picture of the body. One drink every time someone had to “refer to their notes”. One drink every time an officer “in their own words” gave the precise legal definition of being drunk. Lots more where those came from, too.)

Lawyer: “Do you know what a red light means?”

Witness: “Um, stop?”

One final example – the linguistic abuse of the word “hypothetical”. Again, because of obvious legal requirements, you aren’t supposed to say things you can’t technically know – like whether this particular person could have X number of drinks over Y number of hours before being drunk.

Apparently, however, you can do exactly that, as long as you omit the person’s name but put the word “hypothetically” in front of it. That is, “Hypothetically, if a 182 pound man who was 5’9″ tall and 34 years old with brown eyes and no beard were to…” – that’s apparently legit.

For me, the supreme irony in this comedy of process was that the entire trial was a question of recognizing consequences – the crux of a second degree murder charge – and yet we, as the jury, were forbidden to consider the consequences of our own decision (20 years to life or letting him go free because of improper jury agency.)

In game design, the key is to give the player real choices with comprehensible inputs and real consequences. When this link is broken, players become frustrated and feel like the game is cheating them. In much the same way, the jury system fails to align its choices and inputs with the consequences, leaving the result subject more to whim and court politics than to the intrinsic promise of justice the system is supposed to deliver.

Oh, one last thing. To answer the inevitable question of what happened at the trial:

After two weeks of deliberation, it ended in a mistrial – basically the game industry equivalent of spending $10 million on a game only to cancel it a week before launch.

Apropos, I must say.

In 2001, I published Wight.
